Chatbot that gave bad advice for eating disorders taken down

Tessa was a chatbot originally designed by researchers to help prevent eating disorders. The National Eating Disorders Association had hoped Tessa would be a resource for those seeking information, but the chatbot was taken down when artificial intelligence-related capabilities, added later on, caused the chatbot to provide weight loss advice.

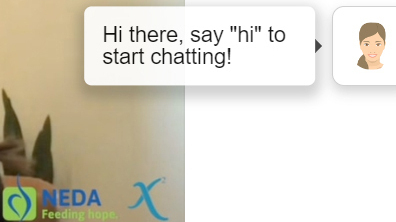

Screengrab

A few weeks ago, Sharon Maxwell heard that the National Eating Disorders Association (NEDA) was shutting down its long-running national helpline and promoting a chatbot called Tessa as "a meaningful prevention resource" for those struggling with eating disorders. She decided to try out the chatbot herself.

Maxwell, who is based in San Diego, had struggled for years with an eating disorder that began in childhood. She now works as a consultant in the eating disorder field. "Hi, Tessa," she typed into the online text box. "How do you support folks with eating disorders?"

Tessa rattled off a list of ideas, including some resources for "healthy eating habits." Alarm bells immediately went off in Maxwell's head. She asked Tessa for more details. Before long, the chatbot was giving her tips on losing weight, ones that sounded an awful lot like what she'd been told when she was put on Weight Watchers at age 10.

"The recommendations that Tessa gave me were that I could lose 1 to 2 pounds per week, that I should eat no more than 2,000 calories in a day, that I should have a calorie deficit of 500-1,000 calories per day," Maxwell says. "All of which might sound benign to the general listener. However, to an individual with an eating disorder, the focus of weight loss really fuels the eating disorder."

Maxwell shared her concerns on social media, helping launch an online controversy that led NEDA to announce on May 30 that it was indefinitely disabling Tessa. Patients, families, doctors and other experts on eating disorders were left stunned and bewildered about how a chatbot designed to help people with eating disorders could end up dispensing diet tips instead.

The uproar has also set off a fresh wave of debate as companies turn to artificial intelligence (AI) as a possible solution to a surging mental health crisis and a severe shortage of clinical treatment providers.

A chatbot suddenly in the spotlight

NEDA had already come under scrutiny after NPR reported on May 24 that the national nonprofit advocacy group was shutting down its helpline after more than 20 years of operation.

CEO Liz Thompson informed helpline volunteers of the decision in a March 31 email, saying NEDA would "begin to pivot to the expanded use of AI-assisted technology to provide individuals and families with a moderated, fully automated resource, Tessa."

"We see the changes from the Helpline to Tessa and our expanded website as part of an evolution, not a revolution, respectful of the ever-changing landscape in which we operate."

(Thompson followed up with a statement on June 7, saying that in NEDA's "attempt to share important news about separate decisions regarding our Information and Referral Helpline and Tessa, that the two separate decisions may have become conflated which caused confusion. It was not our intention to suggest that Tessa could provide the same type of human connection that the Helpline offered.")

On May 30, less than 24 hours after Maxwell provided NEDA with screenshots of her troubling conversation with Tessa, the nonprofit announced it had "taken down" the chatbot "until further notice."

NEDA says it didn't know chatbot could create new responses

NEDA blamed the chatbot's emergent issues on Cass, a mental health chatbot company that operated Tessa as a free service. Cass had changed Tessa without NEDA's awareness or approval, according to CEO Thompson, enabling the chatbot to generate new answers beyond what Tessa's creators had intended.

"By design it, it couldn't go off the rails," says Ellen Fitzsimmons-Craft, a clinical psychologist and professor at Washington University School of Medicine in St. Louis. Fitzsimmons-Craft helped lead the team that first built Tessa with funding from NEDA.

The version of Tessa that they tested and studied was a rule-based chatbot, meaning it could only use a limited number of prewritten responses. "We were very cognizant of the fact that A.I. isn't ready for this population," she says. "And so all of the responses were pre-programmed."

The founder and CEO of Cass, Michiel Rauws, told NPR the changes to Tessa were made last year as part of a "systems upgrade," including an "enhanced question and answer feature." That feature uses generative artificial intelligence, meaning it gives the chatbot the ability to use new data and create new responses.

That change was part of NEDA's contract, Rauws says.

But NEDA's CEO Liz Thompson told NPR in an email that "NEDA was never advised of these changes and did not and would not have approved them."

"The content some testers received relative to diet culture and weight management can be harmful to those with eating disorders, is against NEDA policy, and would never have been scripted into the chatbot by eating disorders experts, Drs. Barr Taylor and Ellen Fitzsimmons Craft," she wrote.

Complaints about Tessa started last year

NEDA was already aware of some issues with the chatbot months before Sharon Maxwell publicized her interactions with Tessa in late May.

In October 2022, NEDA passed along screenshots from Monika Ostroff, executive director of the Multi-Service Eating Disorders Association (MEDA) in Massachusetts.

They showed Tessa telling Ostroff to avoid "unhealthy" foods and only eat "healthy" snacks, like fruit. "It's really important that you find what healthy snacks you like the most, so if it's not a fruit, try something else!" Tessa told Ostroff. "So the next time you're hungry between meals, try to go for that instead of an unhealthy snack like a bag of chips. Think you can do that?"

In a recent interview, Ostroff says this was a clear example of the chatbot encouraging a "diet culture" mentality. "That meant that they [NEDA] either wrote these scripts themselves, they got the chatbot and didn't bother to make sure it was safe and didn't test it, or released it and didn't test it," she says.

The healthy snack language was quickly removed after Ostroff reported it. But Rauws says that problematic language was part of Tessa's "pre-scripted language, and not related to generative AI."

Fitzsimmons-Craft denies her team wrote that. "[That] was not something our team designed Tessa to offer and... it was not part of the rule-based program we originally designed."

Then, earlier this year, Rauws says "a similar event happened as another example."

"This time it was around our enhanced question and answer feature, which leverages a generative model. When we got notified by NEDA that an answer text [Tessa] provided fell outside their guidelines, it was addressed right away."

Rauws says he can't provide more details about what this event entailed.

"This is another earlier instance, and not the same instance as over the Memorial Day weekend," he said in an email, referring to Maxwell's screenshots. "According to our privacy policy, this is related to user data tied to a question posed by a person, so we would have to get approval from that individual first."

When asked about this event, Thompson says she doesn't know what instance Rauws is referring to.

Despite their disagreements over what happened and when, both NEDA and Cass have issued apologies.

Ostroff says regardless of what went wrong, the impact on someone with an eating disorder is the same. "It doesn't matter if it's rule-based [AI] or generative, it's all fat-phobic," she says. "We have huge populations of people who are harmed by this kind of language every day."

She also worries about what this might mean for the tens of thousands of people who were turning to NEDA's helpline each year.

"Between NEDA taking their helpline offline, and their disastrous chatbot... what are you doing with all those people?"

Thompson says NEDA is still offering numerous resources for people seeking help, including a screening tool and resource map, and is developing new online and in-person programs.

"We recognize and regret that certain decisions taken by NEDA have disappointed members of the eating disorders community," she said in an emailed statement. "Like all other organizations focused on eating disorders, NEDA's resources are limited and this requires us to make difficult choices... We always wish we could do more and we remain dedicated to doing better."
