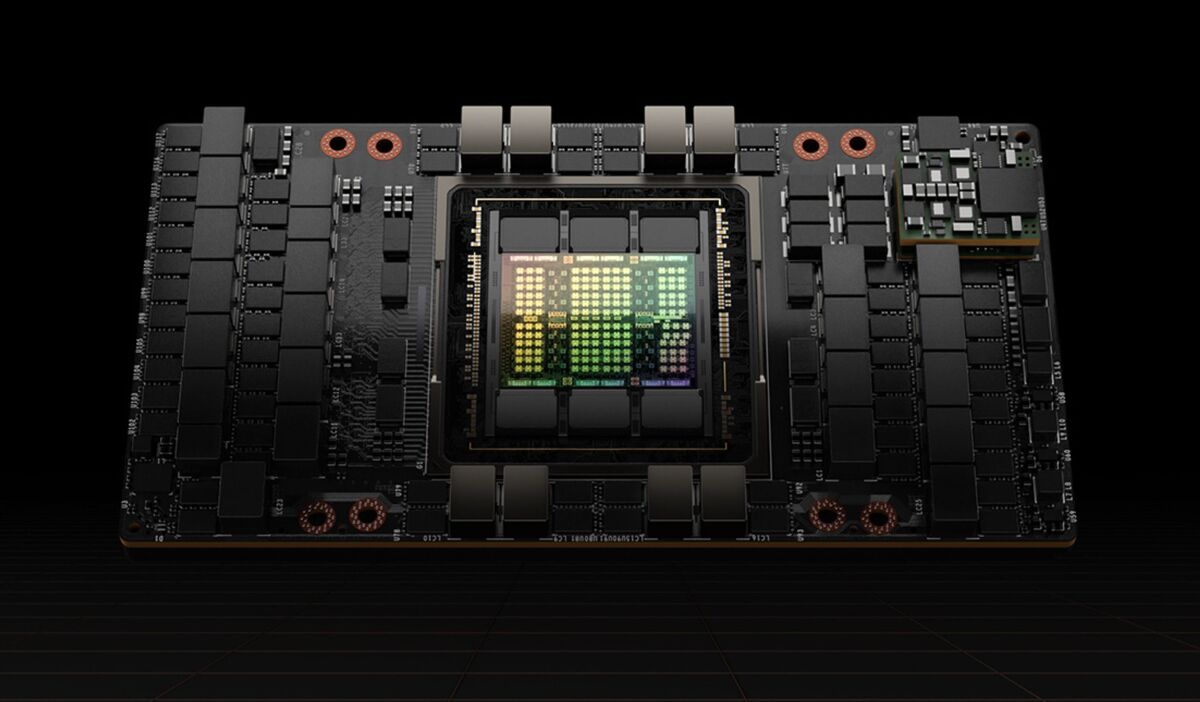

Inside Nvidia’s new AI supercomputer

With Nvidia’s Arm-based Grace processor at its core, the company has launched a supercomputer designed to perform AI processing on a combined CPU/GPU platform.

The new system, formally introduced at the Computex tech conference in Taipei, is the DGX GH200 supercomputer. It is powered by 256 Grace Hopper Superchips, which combine Nvidia’s Grace CPU, a 72-core Arm processor built for high-performance computing, with the Hopper GPU. The two are connected by Nvidia’s proprietary NVLink-C2C high-speed interconnect.

The DGX GH200 features a massive shared memory space of more than 144TB, pooling the Grace CPUs’ LPDDR5X and the Hopper GPUs’ HBM3 and connected by its NVLink-C2C interconnect technology. The system is a simplified design, and its software sees the processors as one huge GPU with one giant memory pool, said Ian Buck, vice president and general manager of Nvidia’s hyperscale and HPC business unit.
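To put the size of that pool in perspective, the short sketch below divides it evenly across the 256 superchips. It is a back-of-envelope calculation only: it treats the pool as exactly 144TB (the article says "more than"), assumes decimal units, and the function name `per_superchip_gb` is purely illustrative.

```python
# Back-of-envelope split of the DGX GH200's pooled memory across its superchips.
# Assumptions: the pool is taken as exactly 144 TB (the article says "more than"),
# and TB/GB are decimal units (10**12 and 10**9 bytes).

TOTAL_MEMORY_TB = 144   # article's shared-memory figure, used as a lower bound
NUM_SUPERCHIPS = 256    # Grace Hopper Superchips in a full DGX GH200


def per_superchip_gb(total_tb: float, chips: int) -> float:
    """Return each superchip's even share of the pool, in decimal gigabytes."""
    return total_tb * 1000 / chips


share_gb = per_superchip_gb(TOTAL_MEMORY_TB, NUM_SUPERCHIPS)
print(f"about {share_gb:.1f} GB per superchip, addressed by software as one pool")
```

Each superchip contributes roughly 562.5GB under these assumptions, but the point of the design is that software does not have to care which chip a given byte lives on.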

He said that, with Nvidia’s help, the system can be deployed to train AI models that need more memory than a single GPU supports. “We need a completely new system architecture that can break through one terabyte of memory in order to train these large models,” he said.
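A rough way to see why one terabyte is a meaningful threshold is to count the bytes needed just to hold a model's weights. The sketch below assumes a hypothetical 1-trillion-parameter model and standard per-parameter sizes for each number format; real training footprints are larger, because gradients, optimizer state, and activations also need memory.

```python
# Rough lower bound on the memory needed just to store a model's weights.
# Assumption: a hypothetical 1-trillion-parameter model. Training also needs
# gradients, optimizer state, and activations, so real footprints are larger.

BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}


def weights_tb(num_params: float, dtype: str) -> float:
    """Decimal terabytes needed to store num_params weights in the given format."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e12


PARAMS = 1e12  # hypothetical model size, not a figure from the article
for dtype in ("fp32", "bf16", "fp8"):
    print(f"{dtype}: {weights_tb(PARAMS, dtype):.1f} TB of weights")
```

Even at eight bits per parameter, the weights of such a model alone reach the one-terabyte mark, far beyond the memory attached to any single GPU.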

Nvidia claims an exaflop of performance, but that figure is based on eight-bit FP8 processing. Today most AI processing is done with 16-bit bfloat16 instructions, which would take twice as long. Put another way, a supercomputer that ranks in the top 10 of the Top500 supercomputer list could occupy a relatively modest room.
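The arithmetic behind that caveat is simple. The sketch below applies the article's "twice as long" rule of thumb to the headline FP8 figure, and also shows what an even per-superchip share would be; the even split is an assumption for illustration, not an Nvidia specification.

```python
# Restating the exaflop claim under the article's 2x bfloat16 slowdown.
# Assumption: throughput is split evenly across all 256 superchips.

FP8_EXAFLOPS = 1.0      # Nvidia's headline figure, measured in FP8
BF16_SLOWDOWN = 2.0     # article: bfloat16 "would take twice as long"
NUM_SUPERCHIPS = 256


def bf16_exaflops(fp8_exaflops: float, slowdown: float) -> float:
    """Effective bfloat16 throughput if the same work takes `slowdown` times longer."""
    return fp8_exaflops / slowdown


def per_chip_petaflops(exaflops: float, chips: int) -> float:
    """Even per-chip share of a system-wide figure, in petaflops."""
    return exaflops * 1000 / chips


print(bf16_exaflops(FP8_EXAFLOPS, BF16_SLOWDOWN))        # 0.5
print(per_chip_petaflops(FP8_EXAFLOPS, NUM_SUPERCHIPS))  # 3.90625
```

In other words, for today's bfloat16-dominated workloads the system behaves more like a half-exaflop machine.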

Using NVLink instead of standard PCI Express interconnects makes the bandwidth between GPU and CPU seven times higher while requiring a fifth of the interconnect power.
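The "seven times" figure can be sanity-checked against published link speeds. The numbers below are assumptions not taken from the article: about 900GB/s of total bandwidth for NVLink-C2C and about 128GB/s total (64GB/s each way) for a PCIe Gen5 x16 link. The 96GB payload is hypothetical, and the transfer times ignore protocol overhead.

```python
# Sanity check of the "seven times" bandwidth claim.
# Assumptions (not from the article): NVLink-C2C ~900 GB/s total,
# PCIe Gen5 x16 ~128 GB/s total (about 64 GB/s per direction).

NVLINK_C2C_GB_S = 900.0
PCIE_GEN5_X16_GB_S = 128.0


def transfer_seconds(size_gb: float, bandwidth_gb_s: float) -> float:
    """Idealized time to move size_gb over a link, ignoring protocol overhead."""
    return size_gb / bandwidth_gb_s


ratio = NVLINK_C2C_GB_S / PCIE_GEN5_X16_GB_S
print(f"bandwidth ratio: {ratio:.1f}x")  # 7.0x

payload_gb = 96.0  # hypothetical payload size
for name, bw in (("NVLink-C2C", NVLINK_C2C_GB_S), ("PCIe Gen5 x16", PCIE_GEN5_X16_GB_S)):
    print(f"{name}: {transfer_seconds(payload_gb, bw):.3f} s to move {payload_gb:.0f} GB")
```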

Google Cloud, Meta, and Microsoft are among the first expected to gain access to the DGX GH200 to explore its capabilities for generative AI workloads. Nvidia also intends to provide the DGX GH200 design as a blueprint to cloud service providers and other hyperscalers so they can further customize it for their infrastructure. Nvidia DGX GH200 supercomputers are expected to be available by the end of the year.

Software included

These supercomputers come with Nvidia software installed, making them a turnkey product. The bundle includes Nvidia AI Enterprise, the main software layer of Nvidia’s AI platform, which provides frameworks, pretrained models, and development tools, along with Base Command for enterprise-level cluster management.

DGX GH200 is the first supercomputer to pair Grace Hopper Superchips with Nvidia’s NVLink Switch System, the interconnect that allows the GPUs in the system to work together as one. The previous-generation system maxed out at eight GPUs working in tandem.

Building the full-size system still requires significant data-center real estate. Each 15-rack-unit chassis holds eight compute nodes, and there are two chassis per rack (or pod, in Nvidia parlance), along with NVSwitch, Ethernet, and IP connectivity. Up to eight of the pods can be linked for up to 256 processors.

The system is air-cooled even though Hopper GPUs draw 700 watts of power, which means considerable heat. Nvidia said it is developing liquid-cooled systems internally and is discussing them with customers and partners, but for now the DGX GH200 is cooled by fans.
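To gauge how much heat the fans must remove, the sketch below multiplies the article's 700-watt GPU figure by the 256 GPUs in a full system. It assumes every GPU runs at that draw simultaneously and deliberately leaves out the Grace CPUs, memory, switches, and the fans themselves, so the real total is higher.

```python
# GPU-only heat load estimate for a full 256-GPU DGX GH200.
# Assumption: every GPU draws the full 700 W at once; CPUs, memory,
# switches, and fans are excluded, so the true total is higher.

GPU_POWER_W = 700
NUM_GPUS = 256


def gpu_heat_kw(per_gpu_w: float, gpus: int) -> float:
    """Combined GPU power draw in kilowatts (nearly all of it ends up as heat)."""
    return per_gpu_w * gpus / 1000


print(f"{gpu_heat_kw(GPU_POWER_W, NUM_GPUS):.1f} kW from the GPUs alone")  # 179.2 kW
```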

So far, potential customers of the system are not ready for liquid cooling, said Charlie Boyle, vice president of DGX systems at Nvidia. “There will be points in the future where we’ll have designs that have to be liquid cooled, but we were able to keep this one on air,” he said.

Nvidia announced at Computex that the Grace Hopper Superchip is in full production. Systems from OEM partners are expected to ship later this year.

Copyright © 2023 IDG Communications, Inc.